Motivation

The Protato Kit hardware is designed to collect a continuous stream of data from the plant, including biochemical markers and images. Raw data on its own, however, has little meaning; its value is only realized once it is processed, visualized, and made interpretable for humans. The primary goal of the Protato app is to turn the raw data collected by our hardware into clear and useful results. The app has three main functions:

- Converting complex sensor readings into user-friendly visual graphs

- Providing a live feed for remote monitoring

- Using machine learning to provide an automated diagnostic tool

In essence, the Protato app is what makes our hardware's data meaningful, empowering users to make informed decisions about crop health.

Usage Guide

Getting started with our application is straightforward. First, the user powers on the hardware device and makes sure it is connected to the same local Wi-Fi network as their mobile phone. Next, the user launches the mobile application, opens the device management screen, and starts a network scan. Once the desired device is selected from the list, the application establishes a connection and monitoring can begin.

The complete source code for our mobile application has been made publicly available. We invite future iGEM teams, researchers, and developers to explore, use, and build upon our work. The repository can be accessed on our team's GitLab.

Development

We developed the application in four phases, moving from design to implementation and testing, with the user's needs guiding each step.

Phase 1: Design and Prototyping

Before writing any code, we identified user needs and mapped out the entire user journey. The image below shows our initial low-fidelity wireframes for each screen, which served as the blueprint for the subsequent development stages.

Phase 2: Technical Framework and Setup

We opted to build the application using the Ionic framework paired with Vue.js. This decision was driven by the need for cross-platform compatibility, enabling us to develop a single codebase that could be deployed natively on both Android and iOS devices.

Phase 3: Core Feature Implementation

We first implemented the application's core functions. These were discovering and connecting to our hardware devices over the local Wi-Fi network using HTTP, handling the real-time data streamed from the sensors, and building a responsive interface that visualizes this data through graphs and status indicators.
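The section does not describe how streamed readings are buffered for the graphs. One simple approach keeps a fixed-size window of the most recent readings for each sensor, so the charts stay bounded in memory. The sketch below assumes a hypothetical `SensorReading` record containing a sensor id, a numeric value, and a millisecond timestamp.

```typescript
// Hypothetical reading shape; the real device payload is not documented here.
interface SensorReading {
  sensor: string;    // sensor-point identifier (assumed)
  value: number;
  timestamp: number; // milliseconds since the Unix epoch
}

/** Keeps the most recent `capacity` readings per sensor, oldest first, for live charts. */
class RollingSeries {
  private readonly capacity: number;
  private readonly series = new Map<string, SensorReading[]>();

  constructor(capacity: number) {
    if (!Number.isInteger(capacity) || capacity < 1) {
      throw new Error("capacity must be a positive integer");
    }
    this.capacity = capacity;
  }

  push(reading: SensorReading): void {
    const buf = this.series.get(reading.sensor) ?? [];
    buf.push(reading);
    // Drop the oldest entries once the window is full.
    if (buf.length > this.capacity) buf.splice(0, buf.length - this.capacity);
    this.series.set(reading.sensor, buf);
  }

  /** Chart-ready points (x = timestamp, y = value). */
  points(sensor: string): { x: number; y: number }[] {
    return (this.series.get(sensor) ?? []).map((r) => ({ x: r.timestamp, y: r.value }));
  }

  /** Most recent reading for a sensor, e.g. for a status indicator. */
  latest(sensor: string): SensorReading | undefined {
    const buf = this.series.get(sensor);
    return buf !== undefined && buf.length > 0 ? buf[buf.length - 1] : undefined;
  }
}
```

The window size trades history depth against memory and redraw cost. The full history can still be persisted separately for export.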

Phase 4: AI Model Integration and Testing

We integrated our pre-trained machine learning model for tomato leaf disease classification directly into the application. Because image analysis runs locally, it protects user privacy and works offline. After integration, we tested the complete system internally and used feedback from team members to make final adjustments to the user interface and overall experience.

Architecture

The architecture of our software is designed for simplicity, reliability, and efficiency, ensuring seamless communication between the hardware and the mobile application within a local environment.

The overall architecture defines a clear, direct data flow. The process begins at the plant, where our hardware's sensors and integrated camera collect raw data and images. The hardware processes this information and acts as a local server, making the data accessible over the local Wi-Fi network. The mobile application runs on the user's smartphone or tablet and communicates with the hardware by sending HTTP requests to its local IP address. Because this client-server model is confined to the local network, the connection is fast and stable and does not depend on internet access or external cloud services.
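The request side of this client-server model can be sketched as follows. The `/readings` path, the JSON shape of `SensorReading`, and the `HttpGet` type are assumptions made for illustration; they are not the hardware's documented interface. `HttpGet` is declared locally so the sketch has no DOM or Node dependencies, and the built-in `fetch` satisfies it.

```typescript
// Minimal HTTP types so the sketch is self-contained; `fetch` is assignable to HttpGet.
interface HttpResponse {
  ok: boolean;
  status: number;
  json(): Promise<unknown>;
}
type HttpGet = (url: string) => Promise<HttpResponse>;

// Hypothetical payload entry returned by the device.
interface SensorReading {
  sensor: string;
  value: number;
  timestamp: number; // milliseconds since the Unix epoch
}

function isReading(x: unknown): x is SensorReading {
  const r = x as Record<string, unknown>;
  return (
    typeof x === "object" && x !== null &&
    typeof r.sensor === "string" &&
    typeof r.value === "number" &&
    typeof r.timestamp === "number"
  );
}

/** Request the latest readings from the device at `ip` (the "/readings" path is hypothetical). */
async function fetchReadings(ip: string, get: HttpGet): Promise<SensorReading[]> {
  const res = await get(`http://${ip}/readings`);
  if (!res.ok) throw new Error(`device ${ip} answered HTTP ${res.status}`);
  const body = await res.json();
  if (!Array.isArray(body)) throw new Error("expected a JSON array of readings");
  // Drop malformed entries instead of failing the whole request.
  return body.filter(isReading);
}
```

Validating each entry with `isReading` means one malformed record does not discard the whole response. Plain `http://` requests to a LAN address may also need to be explicitly allowed in the Android and iOS app configuration.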

We selected our technology stack to meet the project's specific needs. The primary development framework combines Ionic and Vue.js. Ionic provides a library of mobile UI components and the tooling to build the app for both Android and iOS. Vue.js, a progressive JavaScript framework, handles the interface logic and reactivity. This combination allowed us to build a robust and user-friendly application.

The application takes a privacy-centric approach to data management: all sensor readings and user-generated data are stored only in the local storage of the user's device. This design removes the need for user accounts and remote servers. It also improves data security and keeps the application fully functional without an internet connection. For in-depth analysis, users can export their historical sensor data in .csv format.
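Below is a minimal sketch of the CSV export. It assumes a hypothetical `SensorReading` record with a sensor id, a numeric value, and a millisecond timestamp. The column layout is an illustration, not the app's actual export format. Fields containing commas, quotes, or line breaks are quoted as described in RFC 4180.

```typescript
// Hypothetical stored record.
interface SensorReading {
  sensor: string;
  value: number;
  timestamp: number; // milliseconds since the Unix epoch
}

/** Quote a field if it contains a comma, double quote, or line break; inner quotes are doubled. */
function csvField(s: string): string {
  return /[",\r\n]/.test(s) ? `"${s.replace(/"/g, '""')}"` : s;
}

/** Serialize readings to CSV with a header row; timestamps become ISO 8601 strings in UTC. */
function readingsToCsv(readings: SensorReading[]): string {
  const header = ["timestamp", "sensor", "value"];
  const rows = readings.map((r) =>
    [new Date(r.timestamp).toISOString(), csvField(r.sensor), String(r.value)].join(","),
  );
  // CRLF line endings, as in RFC 4180.
  return [header.join(","), ...rows].join("\r\n") + "\r\n";
}
```

ISO 8601 UTC timestamps and CRLF line endings keep the file readable by common spreadsheet and analysis tools. Writing the string to a file or passing it to the system share sheet is platform-specific and is not shown.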

At the heart of our diagnostic feature is a two-stage machine learning pipeline. First, YOLOv5, an object detection model, locates tomato leaves in the image. Each detected leaf is then passed to a custom-trained Convolutional Neural Network (CNN) developed with TensorFlow, which classifies its disease. Both stages are packaged within the application and run entirely on the device.
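The two-stage flow can be expressed as a small composition function. In the sketch below, `LeafDetector`, `LeafClassifier`, and `Crop` are hypothetical interfaces standing in for the on-device YOLOv5 detector, the TensorFlow CNN, and an image-cropping helper. The 0.5 detection threshold is an assumption, and the calls are synchronous for brevity.

```typescript
// Hypothetical interfaces standing in for the real on-device models.
interface Box { x: number; y: number; width: number; height: number; }
interface Detection { box: Box; score: number; }          // detector's confidence that the box is a leaf
interface Classification { label: string; confidence: number; }

interface LeafDetector<Img> { detect(image: Img): Detection[]; }
interface LeafClassifier<Img> { classify(leaf: Img): Classification; }
type Crop<Img> = (image: Img, box: Box) => Img;

interface Diagnosis extends Classification { box: Box; }

/** Stage 1 finds leaves; stage 2 classifies each cropped leaf. Boxes are kept for the overlay. */
function diagnose<Img>(
  image: Img,
  detector: LeafDetector<Img>,
  classifier: LeafClassifier<Img>,
  crop: Crop<Img>,
  minLeafScore = 0.5, // assumed threshold for accepting a detection
): Diagnosis[] {
  return detector
    .detect(image)
    .filter((d) => d.score >= minLeafScore)
    .map((d) => ({ box: d.box, ...classifier.classify(crop(image, d.box)) }));
}
```

Keeping each leaf's bounding box alongside its label lets the app draw each diagnosis over the correct leaf in the image.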

Key Features

The application is equipped with a suite of powerful features designed to make plant health monitoring intuitive and effective.

Seamless Device Management

The application simplifies the initial setup process by automatically scanning the local network for available hardware devices. The user is presented with a list of discovered devices and can connect to them with a single tap, streamlining what is often a complex configuration process. This makes the system highly accessible even for users with limited technical expertise.
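The source does not say how the network scan is implemented. The minimal sketch below makes two assumptions. First, the phone sits on a typical /24 home subnet. Second, a device can be recognized by a hypothetical `probe` function, for example an HTTP request to a status path with a short timeout. The sketch sweeps all 254 host addresses while keeping a bounded number of probes in flight.

```typescript
// Hypothetical probe: resolves true if a Protato device answers at `ip`.
type Probe = (ip: string) => Promise<boolean>;

/** All usable host addresses of a /24 subnet, e.g. "192.168.1" -> 192.168.1.1 ... 192.168.1.254. */
function candidateHosts(prefix: string): string[] {
  const hosts: string[] = [];
  for (let i = 1; i <= 254; i++) hosts.push(`${prefix}.${i}`);
  return hosts;
}

/** Probe every candidate with at most `concurrency` requests in flight; return responders in address order. */
async function scanSubnet(prefix: string, probe: Probe, concurrency = 16): Promise<string[]> {
  const hosts = candidateHosts(prefix);
  const found: boolean[] = new Array(hosts.length).fill(false);
  let next = 0;

  // Each worker repeatedly claims the next unprobed address until none are left.
  async function worker(): Promise<void> {
    while (next < hosts.length) {
      const idx = next++;
      try {
        found[idx] = await probe(hosts[idx]);
      } catch {
        found[idx] = false; // unreachable host or timeout: treat as "no device"
      }
    }
  }

  const workers: Promise<void>[] = [];
  for (let w = 0; w < Math.min(concurrency, hosts.length); w++) workers.push(worker());
  await Promise.all(workers);
  return hosts.filter((_, i) => found[i]);
}
```

Bounding concurrency keeps the sweep fast without opening 254 connections at once. How the app learns its own subnet prefix is platform-specific and is not shown. A discovery protocol such as mDNS would be an alternative to sweeping addresses.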

Real-Time Monitoring

Once connected, the user is presented with the real-time monitoring dashboard. This screen serves as the central hub for at-a-glance information about a specific plant. It features a live video feed from the hardware's camera, providing immediate visual context of the plant's condition. Alongside the video, the dashboard displays the current status and readings from all connected sensor points, offering a comprehensive overview of the plant's physiological state.

AI-Powered Disease Diagnosis

The most advanced feature of our application is AI-powered disease diagnosis. Using our machine learning model, the app automatically identifies ten common types of tomato leaf disease, as well as healthy leaves, directly from images. The model was trained and validated on PlantVillage, a large public repository of plant health images (Hughes and Salathé, 2015), where it reached a validation accuracy of approximately 95%. In practice, the application analyzes the video feed or captured images, locates individual leaves, and overlays the diagnostic result directly onto the image. This gives the user immediate, clear, and actionable visual feedback, turning diagnosis from a manual task into an automated, data-driven one.

We chose YOLOv5 for object detection and trained it with transfer learning from pretrained weights. This speeds up convergence and improves detection performance when training samples are limited. For disease classification, we apply a TensorFlow-based CNN whose structure is inspired by the classic VGG and ResNet architectures and adjusted to our actual data volume and computational resources. We used early stopping during training to prevent overfitting.
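The section does not give the early-stopping settings. One common way to apply early stopping in Keras is the `tf.keras.callbacks.EarlyStopping` callback, with parameters such as `monitor`, `patience`, `min_delta`, and `restore_best_weights`. The sketch below isolates the patience rule to show what it does. Monitoring validation loss and the patience value in the example are assumptions.

```typescript
/**
 * Patience-based early stopping on validation loss. Training stops once the loss has
 * failed to improve by more than `minDelta` for `patience` consecutive epochs. The best
 * epoch is remembered so its weights could be restored.
 */
class EarlyStopping {
  private best = Infinity;
  private bestEpoch = -1;
  private wait = 0;
  private readonly patience: number;
  private readonly minDelta: number;

  constructor(patience: number, minDelta = 0) {
    this.patience = patience;
    this.minDelta = minDelta;
  }

  /** Record one epoch's validation loss; returns true when training should stop. */
  update(epoch: number, valLoss: number): boolean {
    if (valLoss < this.best - this.minDelta) {
      // Improvement: remember this epoch and reset the patience counter.
      this.best = valLoss;
      this.bestEpoch = epoch;
      this.wait = 0;
      return false;
    }
    this.wait += 1;
    return this.wait >= this.patience;
  }

  get bestEpochIndex(): number {
    return this.bestEpoch;
  }
}
```

With a patience of 2, a run whose validation loss bottoms out at epoch 2 and then fails to improve twice in a row stops at epoch 4. Training then falls back to the epoch-2 weights.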

To validate the performance of our classification model, we tracked its accuracy and loss on both the training and validation datasets throughout the training process. The results, plotted over 32 epochs, are shown below.

The graph on the left illustrates the model's accuracy. Both the training accuracy (blue line) and the validation accuracy (orange line) steadily increase and converge at a high level, reaching approximately 95% by the end of the training. This indicates that the model effectively learned to correctly classify the images.

The graph on the right shows the model's loss, which measures the error in its predictions. As expected, both training and validation loss decrease substantially over time and then level off at a low value. The close alignment between the training and validation curves in both plots is important. It indicates that the model is not overfitting the training set and generalizes well to held-out images it did not see during training. Together, these results indicate that the trained model is accurate and reliable enough to serve as the core of our diagnostic application.

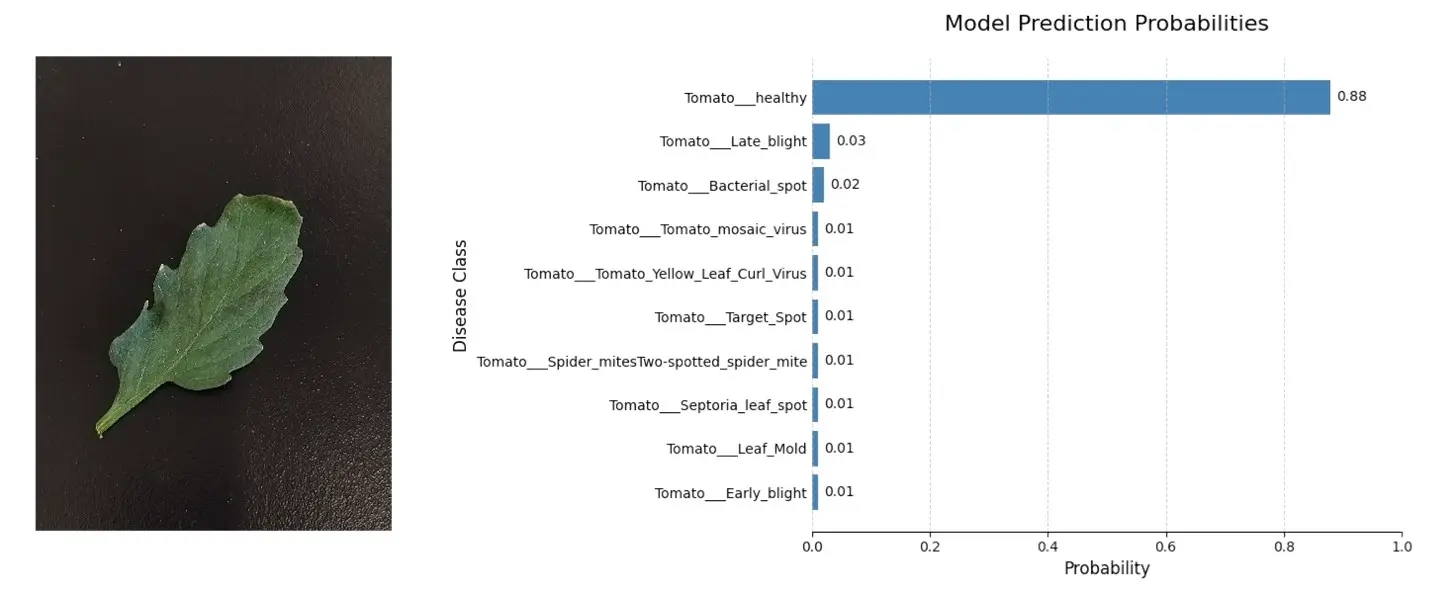

To demonstrate the model's practical application, we tested it on a photograph of a healthy tomato leaf taken by our team. As Fig. 6 shows, the model classified the leaf as "Healthy" with a confidence score of 88%, while the probabilities assigned to all disease categories were negligible.

These results suggest that the system can provide efficient, accurate, and visual diagnosis of tomato leaf diseases in real production environments, making it a promising tool for smart agriculture and precision crop protection.
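The 88% confidence reported for the test leaf is a class probability. A common way to obtain such probabilities is a softmax over the classifier's output scores (logits). The sketch below assumes the CNN outputs raw logits; if its final layer already applies softmax, the first step is unnecessary. The sketch also shows how a label such as "Healthy 88%" could be formatted for the overlay.

```typescript
/** Numerically stable softmax: subtracting the max logit avoids overflow in Math.exp. */
function softmax(logits: number[]): number[] {
  const m = Math.max(...logits);
  const exps = logits.map((z) => Math.exp(z - m));
  const sum = exps.reduce((a, b) => a + b, 0);
  return exps.map((e) => e / sum);
}

/** Most probable class and its probability. */
function topClass(labels: string[], logits: number[]): { label: string; confidence: number } {
  if (labels.length === 0 || labels.length !== logits.length) {
    throw new Error("labels and logits must be non-empty and the same length");
  }
  const probs = softmax(logits);
  let best = 0;
  for (let i = 1; i < probs.length; i++) if (probs[i] > probs[best]) best = i;
  return { label: labels[best], confidence: probs[best] };
}

/** Whole-number percentage label for the on-screen overlay. */
function formatLabel(c: { label: string; confidence: number }): string {
  return `${c.label} ${Math.round(c.confidence * 100)}%`;
}
```

Because the probabilities sum to 1, a high score for one class means the remaining classes share only a small amount of probability.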

Future Work

While our current system provides a robust foundation for intelligent plant monitoring, we have a clear and ambitious roadmap for future enhancements.

A key priority is automated monitoring. We plan to develop a feature that lets the hardware automatically capture and analyze images at user-defined intervals. This would build a continuous health record of the plant over time, making it possible to track disease progression and detect subtle changes that manual checks might miss.

We also intend to focus on performance optimization. This will involve detailed benchmarks of the on-device AI model across a wide range of mobile devices, to ensure a smooth and responsive experience for all users.

Furthermore, we aim to enhance the robustness of the application. This includes developing more sophisticated error handling mechanisms to gracefully manage potential issues such as intermittent network connectivity or hardware malfunctions, providing clearer feedback to the user in such scenarios.
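One standard technique for handling intermittent connectivity is retrying failed requests with exponential backoff. In the minimal sketch below, the caller supplies `sleep`, so the function can be tested without real timers. The attempt count and base delay are illustrative assumptions.

```typescript
type Sleep = (ms: number) => Promise<void>;

/**
 * Retry an async operation with exponential backoff. The function waits baseMs, 2*baseMs,
 * 4*baseMs, ... between attempts and rethrows the last error after `maxAttempts` failures.
 */
async function withRetry<T>(
  op: () => Promise<T>,
  sleep: Sleep,
  maxAttempts = 4, // assumed default
  baseMs = 500,    // assumed default
): Promise<T> {
  let lastError: unknown;
  for (let attempt = 0; attempt < maxAttempts; attempt++) {
    try {
      return await op();
    } catch (err) {
      lastError = err;
      // No wait after the final attempt.
      if (attempt < maxAttempts - 1) await sleep(baseMs * 2 ** attempt);
    }
  }
  throw lastError;
}
```

In the app, `sleep` could be `(ms) => new Promise<void>((resolve) => setTimeout(resolve, ms))`. The UI could show a reconnecting state while retries are in progress and a clear error message once they are exhausted.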

Finally, we see the platform's extensibility as a core long-term goal. Although the current machine learning model is trained specifically for tomato diseases, the underlying software and hardware framework is modular and adaptable. By integrating new sensor types and training new models on different datasets, the system could be extended to monitor many other plants and agricultural conditions, making it a versatile tool for the future of precision agriculture.

References

- David P. Hughes and Marcel Salathé (2015). An open access repository of images on plant health to enable the development of mobile disease diagnostics. arXiv preprint arXiv:1511.08060. https://doi.org/10.48550/arXiv.1511.08060 (PlantVillage: https://plantvillage.psu.edu/)